Prediction Results¶

When a model finishes training, click on the model to see the results.

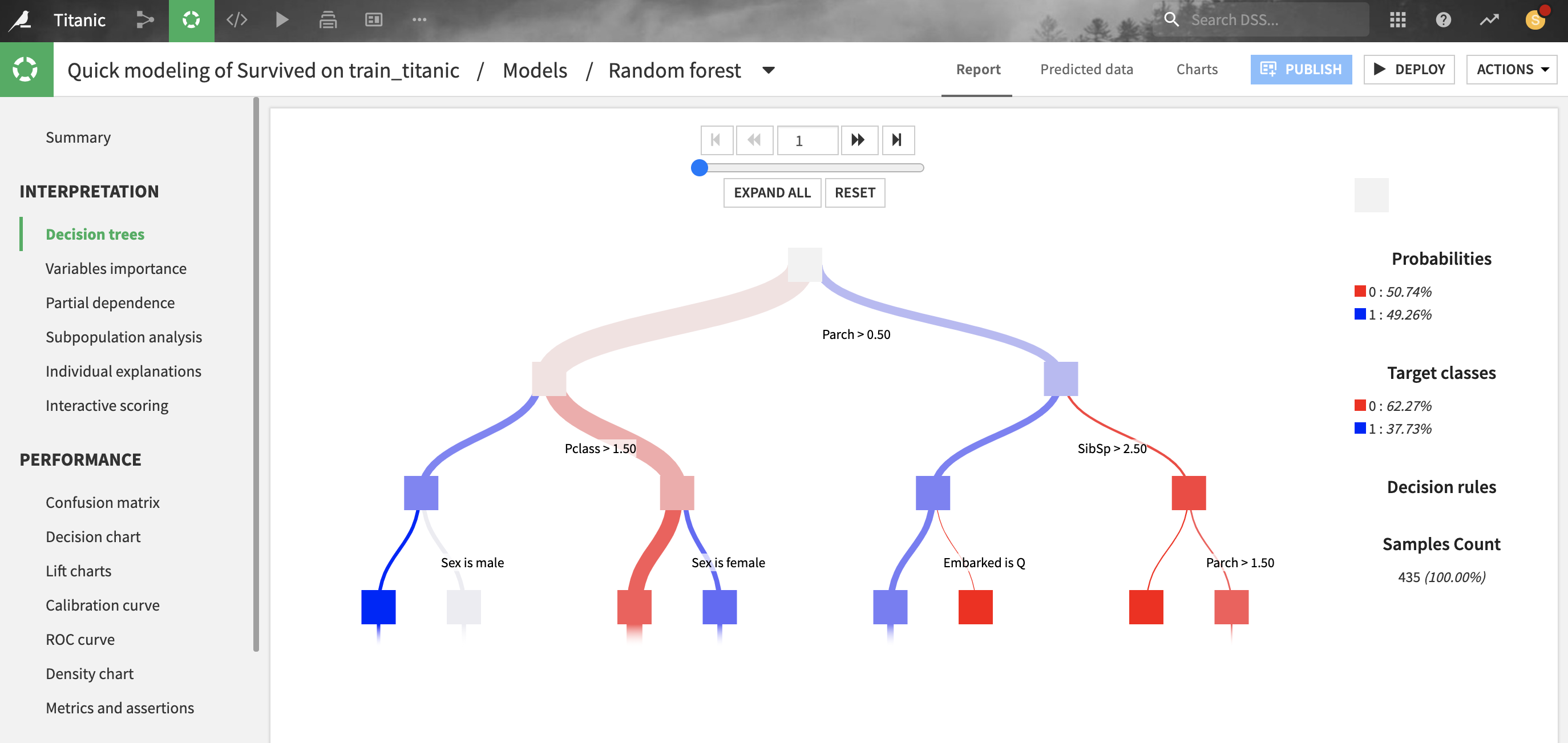

Decision tree analysis¶

For tree-based scikit-learn models, Dataiku can display the decision trees underlying the model. This can be useful to inspect a tree-based model and better understand how its predictions are computed.

For classification trees, the “target classes proportions” describe the raw distribution of classes at each node of the decision tree, whereas the “probability” represents what would be predicted if the node were a leaf. There will be a difference when the “class weights” weighting strategy is chosen (which is the case by default): the classifier will predict the weighted proportion of classes, so “probabilities” with class weights are always based on a balanced reweighting of the data. Whereas “target classes proportions” are simply the raw proportion of classes and will be just as unbalanced as the training data.

For gradient boosted trees, note that the decision trees are inherently hard to interpret, especially for classifications:

by construction, each tree is built as to correct the sum of the trees built before, and is thus hard to interpret in isolation

classification models are actually composed of regression trees, over the gradient of the loss of a binary classification (even for multiclass where one vs. all strategy is used)

for classifications, each tree prediction is homogenous to a log-odds and not a probability and thus can take arbitrary numerical values outside of the (0, 1) range.

All parameters displayed in this screen are computed from the data collected during the training of the decision trees, so they are entirely based on the train set.

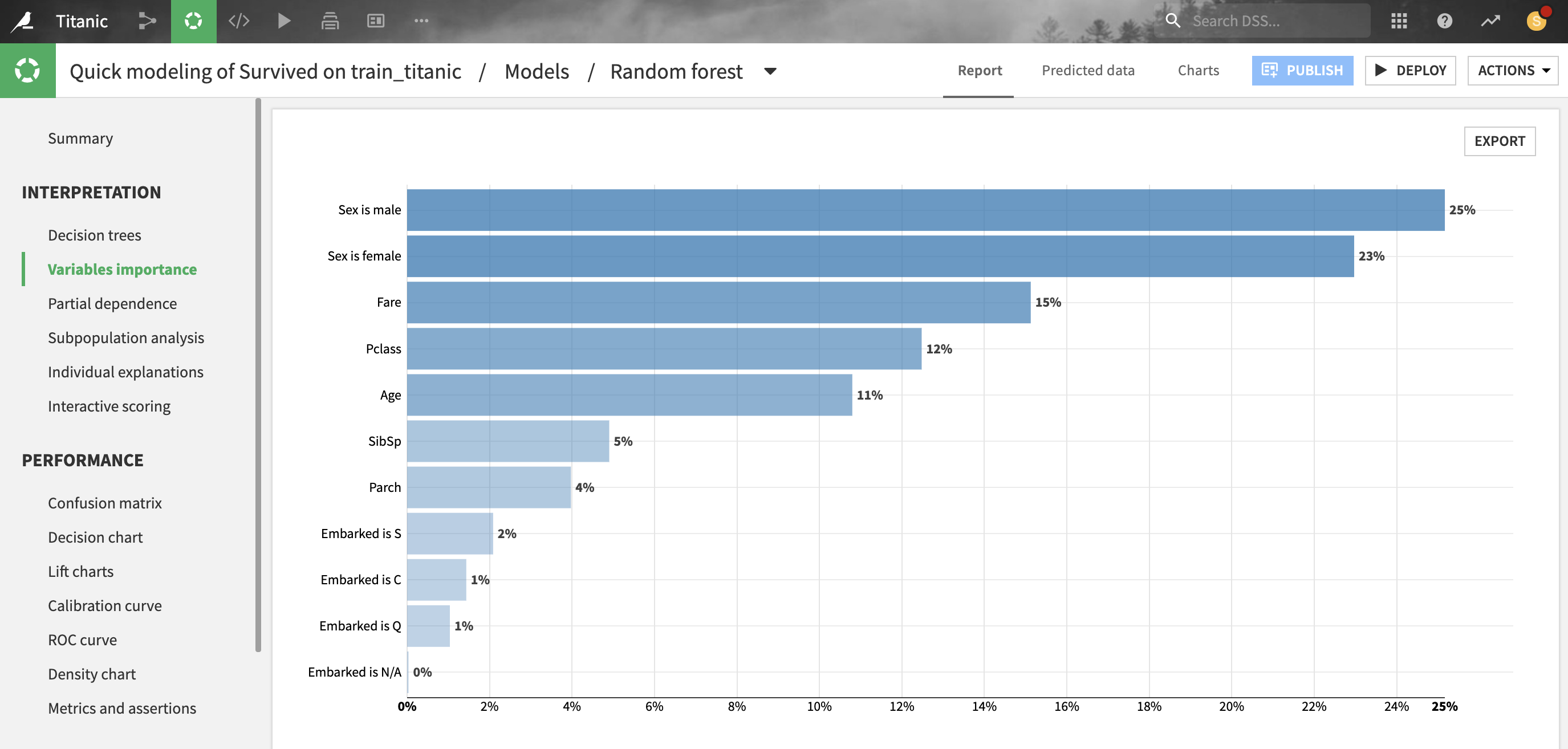

Feature importance and Regression coefficients¶

For most models trained in Python (e.g. scikit-learn, LightGBM, XGBoost), Dataiku provides estimations of how much a prediction changes with a change in each feature.

For tree-based scikit-learn, LightGBM and XGBoost models, “feature importances” are computed as the (normalized) total reduction of the splitting criterion brought by that feature (also called Gini importance).

Importance is an intrinsic measure of how much each feature weighs in the trained model and thus is always positive and always sum up to one, regardless of the accuracy of the model. Importance reflects both how often the feature is selected for splitting, and how much the prediction changes when the feature does. Note that such a definition of feature importance tends to overemphasize numerical features with many different values.

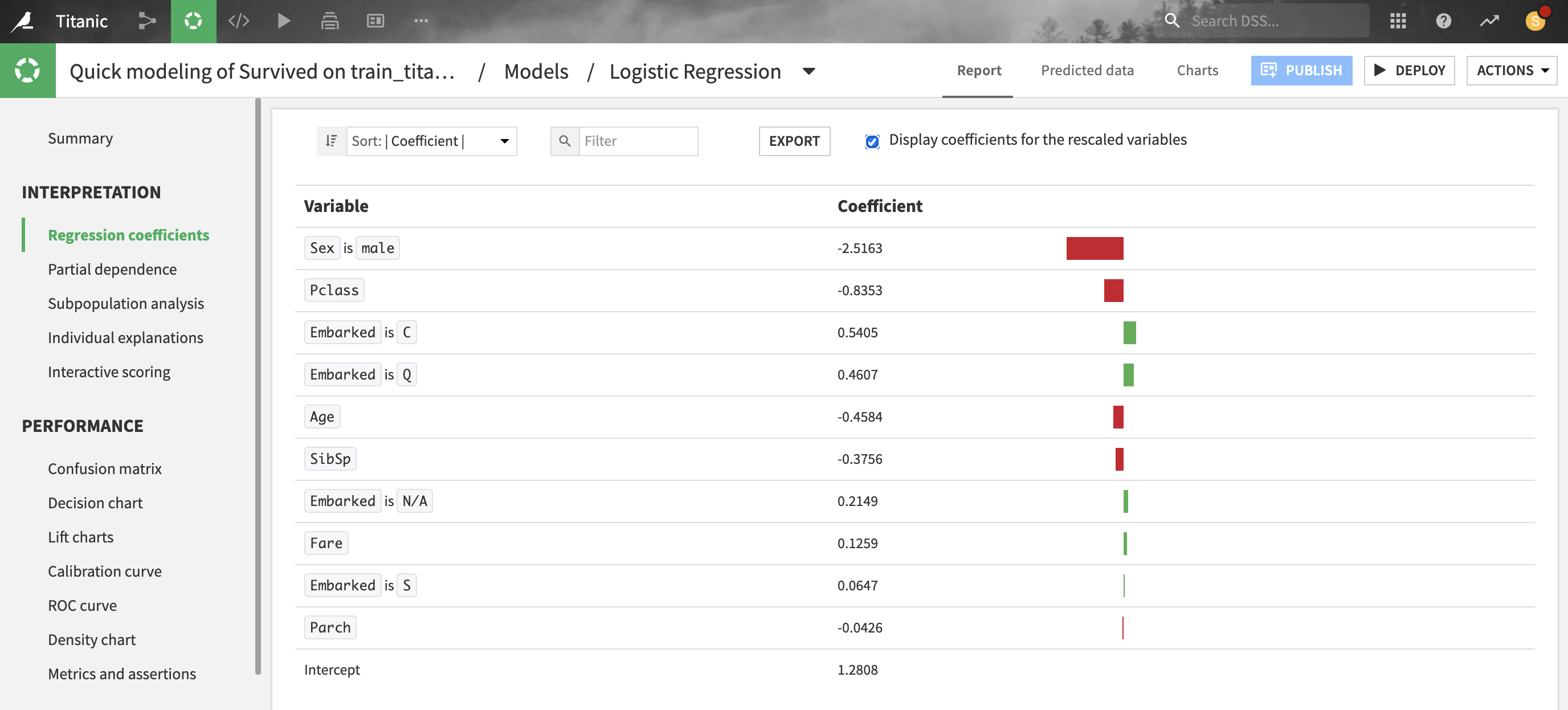

For linear models, Dataiku provides the coefficient of each feature.

Note that for numerical features, it is recommended to consider the coefficients of rescaled variables (i.e. preprocessed features with null mean and unit variance) before comparing these coefficients with each other.

All parameters displayed in these screens are computed from the data collected during the training, so they are entirely based on the train set.

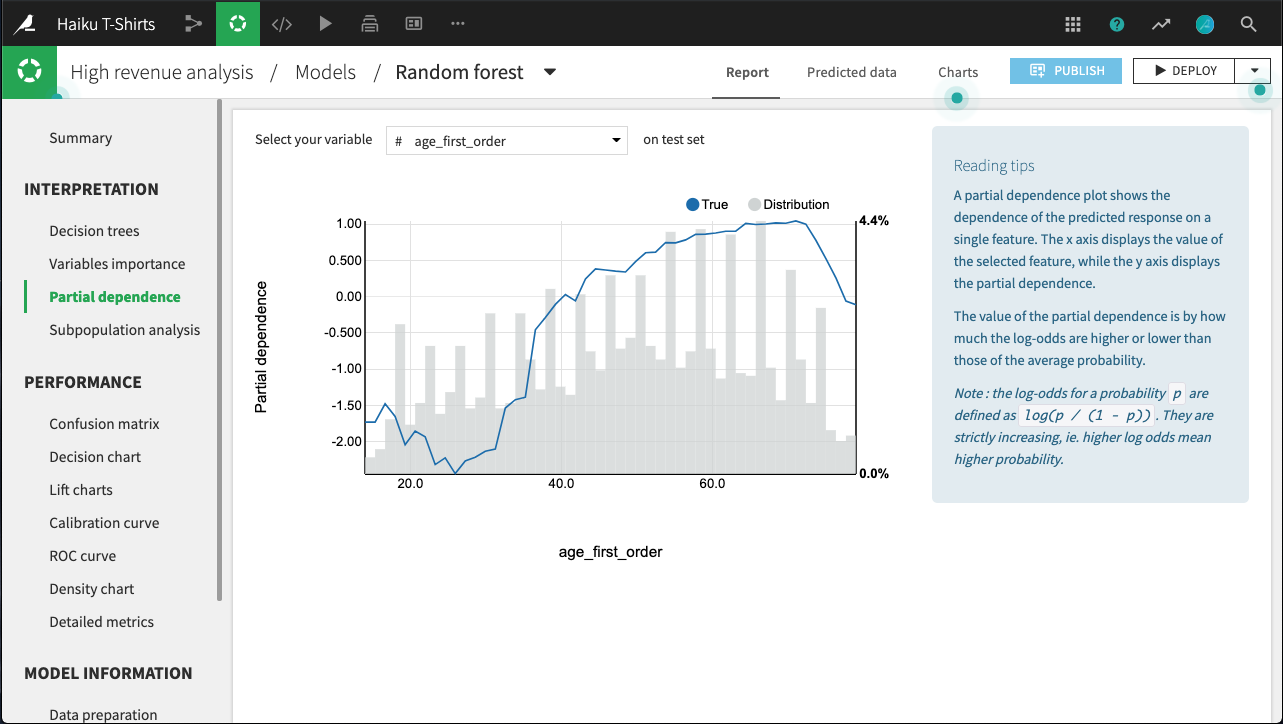

Partial dependence¶

For all models trained in Python (e.g., scikit-learn, keras, custom models), Dataiku can compute and display partial dependence plots. These plots can help you to understand the relationship between a feature and the target.

Partial dependence plots are a post-hoc analysis that can be computed after a model is built. Since they can be expensive to produce when there are many features, you need to explicitly request these plots on the Partial dependence page of the output.

For example, in the figure below, all else held equal, age_first_order under 40 will yield predictions below the average prediction. The relationship appears to be roughly parabolic with a minimum somewhere between ages 25 and 30. After age 40, the relationship is slowly increasing, until a precipitous dropoff in late age.

Note that for classifications, the predictions used to plot partial dependence are not the predicted probabilities of the class, but their log-odds defined as log(p / (1 - p)).

The plot also displays the distribution of the feature, so that you can determine whether there is sufficient data to interpret the relationship between the feature and target.

Subpopulation analysis¶

For regression and binary classification models trained in Python (e.g., scikit-learn, keras, custom models), Dataiku can compute and display subpopulation analyses. These can help you to assess if your model behaves identically across subpopulations; for example, for a bias or fairness study.

Subpopulation analyses are a post-hoc analysis that can be computed after a model is built. Since they can be expensive to produce when there are many features, you need to explicitly request these plots on the Subpopulation analysis page of the output.

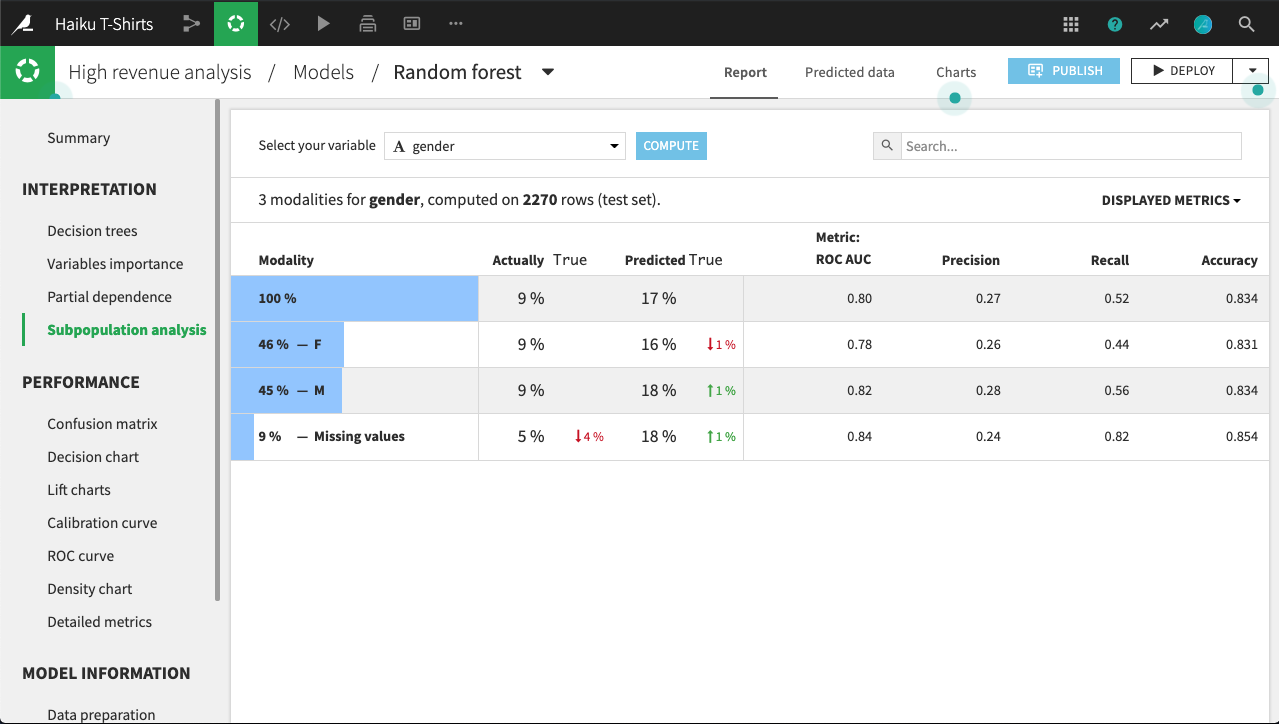

The primary analysis is a table of various statistics that you can compare across subpopulations, as defined by the values of the selected column. You need to establish, for your use case, what constitutes “fair”.

For example, the table below shows a subpopulation analysis for the column gender. The model-predicted probabilities for male and female are close, but not quite identical. Depending upon the use case, we may decide that this difference is not significant enough to warrant further investigation.

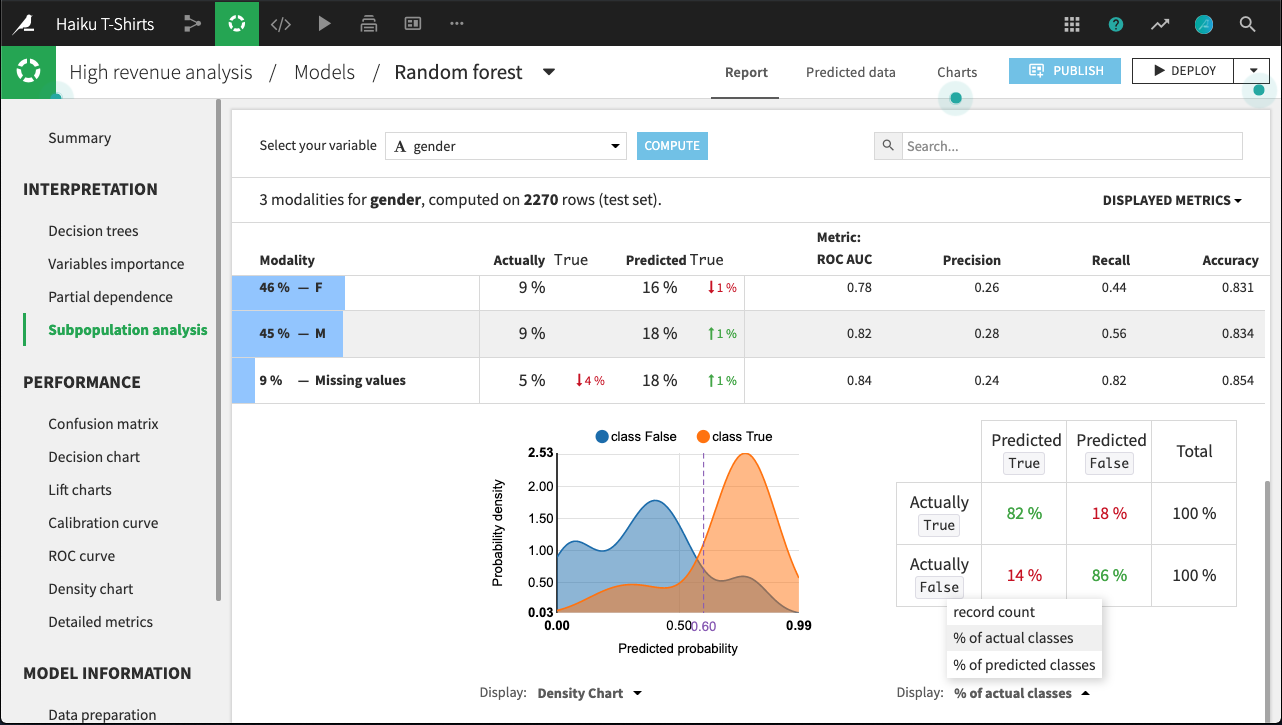

By clicking on a row in the table, we can display more detailed statistics related to the subpopulation represented by that row.

For example, the figure below shows the expanded display for rows whose value of gender is missing. The number of rows that are Actually True is lower for this subpopulation than for males or females. By comparing the % of actual classes view for this subpopulation versus the overall population, it looks like the model does a significantly better job of predicting actually true rows with missing gender than otherwise.

The density chart suggests this may be because the class True density curve for missing gender has a single mode around 0.75 probability. By contrast, the density curve for the overall population has a second mode just below 0.5 probability.

What’s not clear, and would require further investigation, is whether this is a “real” difference, or an artifact of the relatively small subpopulation of missing gender.

Individual explanations of predictions¶

Please see Individual prediction explanations.