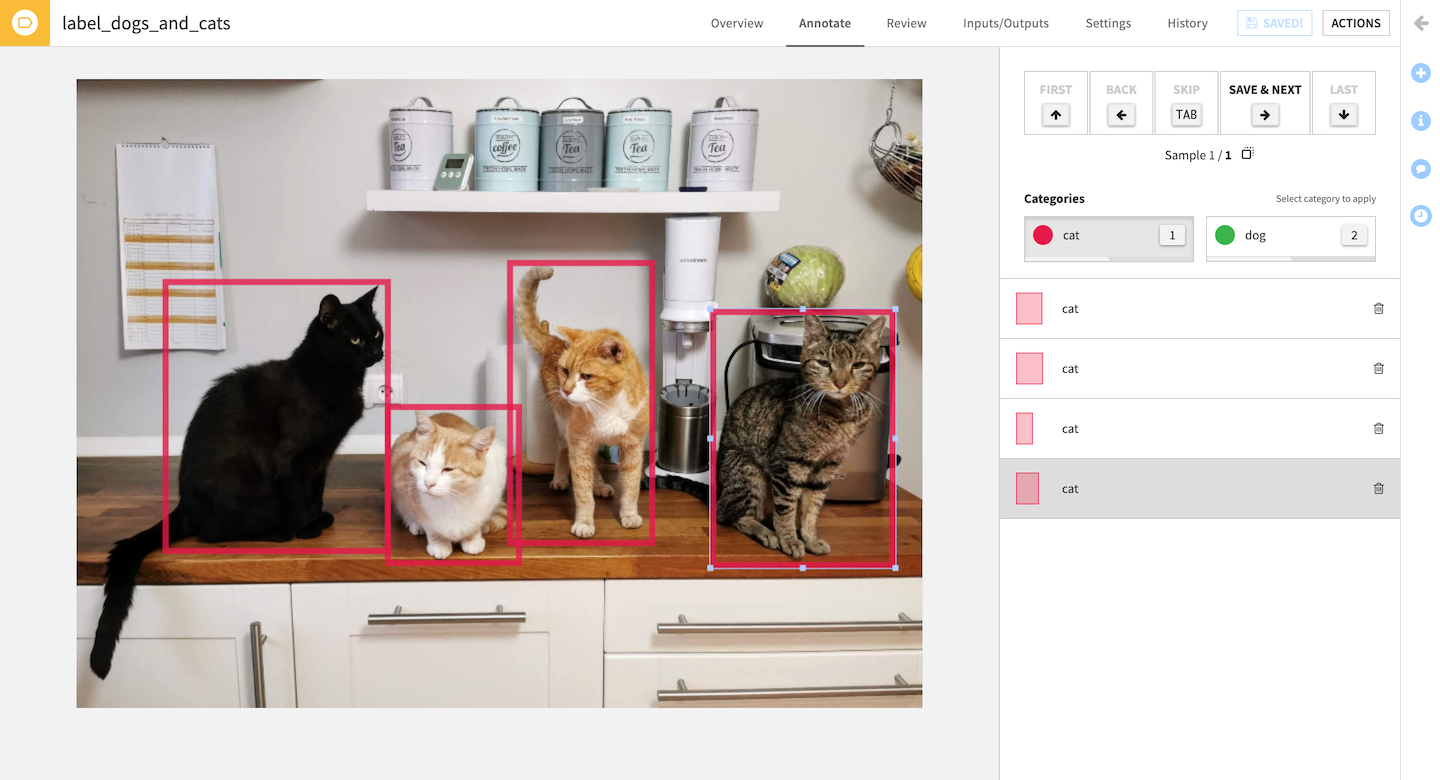

Image labeling

Label images collaboratively, for example to train a supervised computer vision model.

Image labeling supports two use cases:

Image classification (one class per image)

Object detection (multiple bounding boxes per image, each with a class)

Labeling Task

To label your data, you need to create a Labeling Task. It takes two mandatory inputs:

a Managed Folder containing the images

a Dataset with a column containing the paths to the images in the folder. You can easily create this Dataset from the managed folder above with the List Folder Contents recipe.

To use the task, you also need to specify:

which column in the input Dataset contains the paths to the images

the target classes
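To make the expected inputs concrete, the sketch below builds a table of image paths from a local directory, similar in spirit to what the List Folder Contents recipe produces, and checks a task configuration against it. This is plain Python, not the DSS API: `list_folder_contents`, `TaskConfig`, the `path` column name, the extension filter and the relative-path format are all hypothetical.

```python
import tempfile
from dataclasses import dataclass
from pathlib import Path

# Hypothetical set of file extensions treated as images
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}


def list_folder_contents(root):
    """Return one row per image file, keyed by a hypothetical "path" column."""
    root = Path(root)
    rows = []
    for file in sorted(root.rglob("*")):
        if file.is_file() and file.suffix.lower() in IMAGE_EXTENSIONS:
            # Path relative to the folder root, with forward slashes (assumed format)
            rows.append({"path": file.relative_to(root).as_posix()})
    return rows


@dataclass
class TaskConfig:
    """Hypothetical stand-in for the two settings the task requires."""
    path_column: str  # which input column holds the image paths
    classes: list     # the target classes

    def validate(self, rows):
        if not self.classes:
            raise ValueError("at least one target class is required")
        if any(self.path_column not in row for row in rows):
            raise ValueError(f"column {self.path_column!r} is missing")


# Usage: a temporary folder with one image and one non-image file
with tempfile.TemporaryDirectory() as tmp:
    (Path(tmp) / "cats").mkdir()
    (Path(tmp) / "cats" / "a.jpg").write_bytes(b"")
    (Path(tmp) / "notes.txt").write_text("not an image")
    rows = list_folder_contents(tmp)

config = TaskConfig(path_column="path", classes=["cat", "dog"])
config.validate(rows)
```

Only `cats/a.jpg` ends up in `rows`; the text file is skipped by the extension filter.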

The Overview tab lets Reviewers follow the progress of each Annotator and of the task as a whole.

Review

Multiple users (Annotators) can work on the same Labeling Task and annotate data. To limit annotation errors, you can require a minimum number of Annotators to label each image. During the review process, a Reviewer validates that the annotations are correct and arbitrates between conflicting annotations.

Conflicts happen when several Annotators label the same record differently. For instance:

in image classification, two Annotators selected different classes for the same image

in object detection, two bounding boxes from two Annotators don’t overlap enough, or don’t have the same class.
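The two conflict cases above can be sketched as simple checks. The page does not say how "overlap enough" is measured; this sketch swaps in intersection over union (IoU), a common overlap measure for bounding boxes, with an assumed threshold of 0.5. `Box`, `iou`, `classification_conflict` and `box_conflict` are illustrative names, not part of DSS.

```python
from typing import NamedTuple


class Box(NamedTuple):
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str


def iou(a, b):
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    inter_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = inter_w * inter_h
    area_a = (a.x_max - a.x_min) * (a.y_max - a.y_min)
    area_b = (b.x_max - b.x_min) * (b.y_max - b.y_min)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def classification_conflict(labels):
    """Conflict if the Annotators picked more than one distinct class."""
    return len(set(labels)) > 1


def box_conflict(a, b, min_iou=0.5):
    """Conflict if two boxes overlap too little or carry different classes.

    min_iou=0.5 is an assumed threshold, not a documented DSS value.
    """
    return iou(a, b) < min_iou or a.label != b.label


a = Box(0, 0, 10, 10, "cat")
b = Box(5, 0, 15, 10, "cat")   # overlap 50, union 150: IoU is 1/3
c = Box(1, 0, 11, 10, "dog")   # large overlap, but a different class
```

Here `a` and `b` conflict because they overlap too little, while `a` and `c` conflict because their classes differ.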

To resolve a conflict, the Reviewer can either pick the one correct annotation (discarding the others), or discard all of them and provide an authoritative annotation directly (which is then considered already validated).

To speed up the review process, a Reviewer can “Auto Validate” all records that have enough annotations and no conflicts.
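The two review actions described above can be modeled for image classification as follows. This is a hypothetical in-memory model meant only to show the rules: `Record`, `auto_validate` and `resolve` are illustrative names, and `min_annotators` stands in for the minimum number of Annotators configured on the task.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    image: str
    annotations: dict                 # Annotator name -> class label
    validated: Optional[str] = None   # the validated label, if any

    def has_conflict(self):
        return len(set(self.annotations.values())) > 1


def auto_validate(records, min_annotators):
    """Validate records with enough annotations and no conflict; return how many."""
    count = 0
    for r in records:
        if (r.validated is None
                and len(r.annotations) >= min_annotators
                and not r.has_conflict()):
            r.validated = next(iter(r.annotations.values()))
            count += 1
    return count


def resolve(record, pick=None, override=None):
    """Keep one Annotator's label, or replace all labels with an authoritative one."""
    if (pick is None) == (override is None):
        raise ValueError("pass exactly one of pick or override")
    if pick is not None:
        label = record.annotations[pick]
        record.annotations = {pick: label}  # the other annotations are discarded
    else:
        label = override
        record.annotations = {}             # all Annotator labels are discarded
    record.validated = label                # either way, the record is now validated


records = [
    Record("a.jpg", {"ann1": "cat", "ann2": "cat"}),  # agreement
    Record("b.jpg", {"ann1": "cat", "ann2": "dog"}),  # conflict
    Record("c.jpg", {"ann1": "dog"}),                 # not enough annotations
]
n = auto_validate(records, min_annotators=2)
resolve(records[1], pick="ann2")
```

Only `a.jpg` is auto-validated; `b.jpg` needs the Reviewer to arbitrate, and `c.jpg` waits for a second Annotator.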

Permissions

There are different access levels on a Labeling Task:

View: a user or group can access the basic information in the labeling task

Annotate: View + can annotate data

Review: Annotate + can review annotations

Read configuration: View + read all the information in the labeling task

Manage: Read configuration + Review + can edit the settings of the labeling task
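The list above defines each access level in terms of the others. One way to see everything a level grants is to read each "+" as an inclusion and expand it transitively. The level names come from the list; the `INCLUDES` table and the functions are an illustrative model, not how DSS checks permissions internally.

```python
# Each level maps to the levels it directly includes (the "+" in the list above)
INCLUDES = {
    "View": set(),
    "Annotate": {"View"},
    "Review": {"Annotate"},
    "Read configuration": {"View"},
    "Manage": {"Read configuration", "Review"},
}


def implied_levels(level):
    """Return the level plus every level it transitively includes."""
    result = {level}
    stack = [level]
    while stack:
        for included in INCLUDES[stack.pop()]:
            if included not in result:
                result.add(included)
                stack.append(included)
    return result


def can(granted, required):
    """True if holding `granted` is enough for an action that needs `required`."""
    return required in implied_levels(granted)
```

For example, Manage implies all five levels, while Annotate and Read configuration are independent: neither implies the other.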

Users and groups with Write project content access to a Project are implicitly granted Manage access on all labeling tasks in that project. Likewise, users and groups with Read project content access to a project are implicitly granted Read configuration access on all labeling tasks in that project.

Ownership of the task confers Manage access.

Additional Annotate or Review permissions can be granted on each specific task (in its Settings > Permissions tab). Note that users also need at least the Read dashboards permission on the parent Project to access the Labeling Task (e.g. as an Annotator or Reviewer).
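The rules above combine several sources of access: project permissions, task ownership, and explicit grants on the task. The sketch below collects the task levels a user receives from each source and applies the Read dashboards prerequisite. The function names are hypothetical, and treating Read project content and Write project content as satisfying "at least Read dashboards" is an assumption based on the wording "at least".

```python
# Project permissions assumed to satisfy "at least Read dashboards"
AT_LEAST_READ_DASHBOARDS = {
    "Read dashboards",
    "Read project content",
    "Write project content",
}


def task_levels(project_perms, is_owner, task_grants):
    """Collect the task access levels a user gets from each source."""
    levels = set(task_grants)  # explicit Annotate/Review grants on this task
    if is_owner or "Write project content" in project_perms:
        levels.add("Manage")
    if "Read project content" in project_perms:
        levels.add("Read configuration")
    return levels


def can_access_task(project_perms, is_owner, task_grants):
    """Task-level access only helps if the user can also reach the project."""
    has_project_access = bool(AT_LEAST_READ_DASHBOARDS & set(project_perms))
    return has_project_access and bool(
        task_levels(project_perms, is_owner, task_grants)
    )
```

For instance, an Annotator granted Annotate on the task but with no permission on the parent Project cannot open the task; adding Read dashboards on the project fixes that.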

Output: Labels Dataset

The Labels Dataset is the output of a Labeling Task. You can choose between two modes:

only validated annotations (default): the dataset shows only the annotations that have been validated by a Reviewer

all annotations: the dataset will show all the annotations
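The two output modes can be pictured as two filters computed on read over the stored annotations, which is consistent with the Labels Dataset being a view rather than a built dataset. The mode names `"validated"` and `"all"`, the record layout and the output column names are hypothetical.

```python
def labels_view(records, mode="validated"):
    """Compute Labels Dataset rows on each call (hypothetical model of the view)."""
    if mode not in ("validated", "all"):
        raise ValueError(f"unknown mode: {mode!r}")
    rows = []
    for r in records:
        if mode == "validated":
            # Only records a Reviewer has validated appear, one row per image
            if r["validated"] is not None:
                rows.append({"image": r["image"], "label": r["validated"]})
        else:
            # Every annotation appears, one row per Annotator
            for annotator, label in r["annotations"].items():
                rows.append({"image": r["image"], "annotator": annotator, "label": label})
    return rows


records = [
    {"image": "a.jpg", "annotations": {"ann1": "cat", "ann2": "cat"}, "validated": "cat"},
    {"image": "b.jpg", "annotations": {"ann1": "dog"}, "validated": None},
]
validated_rows = labels_view(records)
all_rows = labels_view(records, mode="all")
```

Because the rows are derived on every call, a newly validated annotation shows up the next time the view is read, without any rebuild step.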

This Dataset is a view of the annotations; it does not need to be (re)built.