Evaluating DSS models

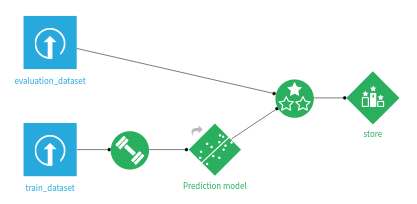

To evaluate a DSS model, you must create an Evaluation recipe.

An Evaluation recipe takes as inputs:

an evaluation dataset

a model

An Evaluation recipe can have up to three outputs:

an Evaluation Store, containing the main Model Evaluation and all associated result screens

an output dataset, containing the input features, prediction and correctness of prediction for each record

a metrics dataset, containing just the performance metrics for this evaluation (i.e. it’s a subset of the Evaluation Store)

Any combination of those three outputs is valid.
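As a rough illustration of how the output dataset and the metrics dataset differ, the sketch below scores a toy evaluation dataset in plain Python. It does not use the DSS API: the `evaluate` function, the column names `prediction` and `prediction_correct`, and the threshold "model" are all assumptions made for this example.

```python
from typing import Callable, Dict, List, Tuple


def evaluate(records: List[Dict], target: str,
             predict: Callable[[Dict], str]) -> Tuple[List[Dict], Dict]:
    """Return (output_rows, metrics) for a labelled evaluation dataset."""
    output_rows = []
    for record in records:
        features = {k: v for k, v in record.items() if k != target}
        prediction = predict(features)
        # Output dataset row: the original record, the prediction,
        # and whether the prediction matches the known target value.
        output_rows.append({
            **record,
            "prediction": prediction,
            "prediction_correct": prediction == record[target],
        })
    n_correct = sum(row["prediction_correct"] for row in output_rows)
    # Metrics dataset row: aggregate performance only, no per-record data.
    metrics = {"accuracy": n_correct / len(output_rows) if output_rows else None}
    return output_rows, metrics


# Toy evaluation dataset and a stand-in "model" (a simple threshold rule).
evaluation_dataset = [
    {"age": 25, "churn": "no"},
    {"age": 61, "churn": "yes"},
    {"age": 47, "churn": "yes"},
    {"age": 33, "churn": "no"},
]
rows, metrics = evaluate(
    evaluation_dataset, "churn", lambda f: "yes" if f["age"] > 50 else "no"
)
```

Here `rows` plays the role of the output dataset (one row per evaluated record) and `metrics` plays the role of the metrics dataset (one row per evaluation).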

Each time the evaluation recipe runs, a new Model Evaluation is added into the Evaluation Store.

Note

Evaluation recipes can be used both with models trained using DSS visual machine learning and with imported MLflow models.

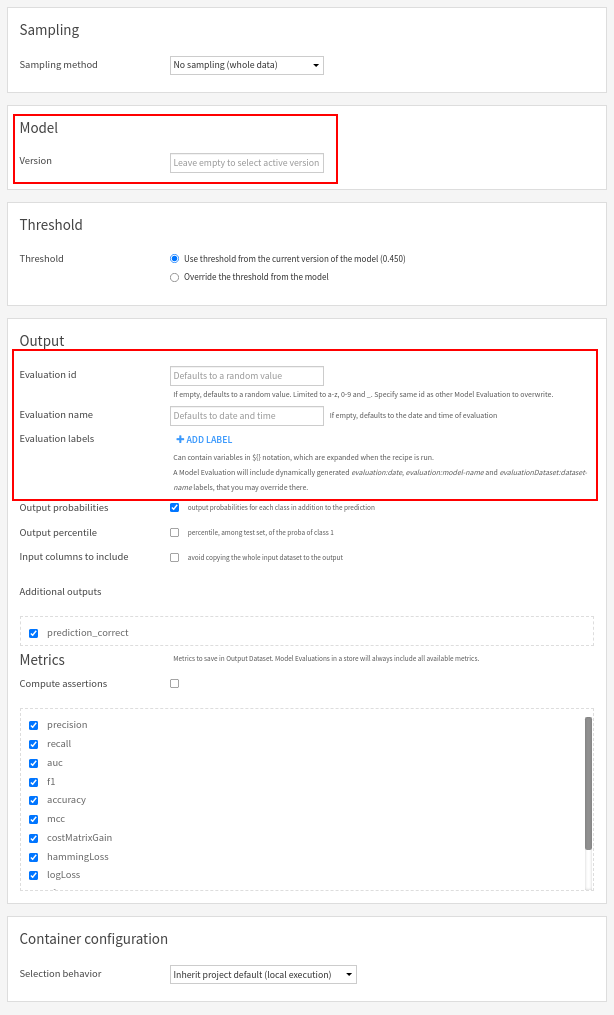

Configuration of the evaluation recipe

In this screen, you can:

Select the model version to evaluate. Default is the active version.

Set the human-readable name of the evaluation. The default is the date and time of the run.

(Advanced) Override the unique identifier of the new Model Evaluation in the output Evaluation Store. Use this only if you want to overwrite an existing evaluation. The default is a random string.

Other items in the Output block relate to the output dataset. The Metrics block controls what is output in the metrics dataset.
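The effect of the name and identifier settings can be pictured with a small in-memory model. This is only a sketch of the behavior described above, not the DSS implementation: `ToyEvaluationStore`, `add_evaluation`, and the identifier `weekly-check` are hypothetical names.

```python
import uuid
from datetime import datetime
from typing import Dict, Optional


class ToyEvaluationStore:
    """In-memory stand-in for an Evaluation Store (illustration only)."""

    def __init__(self) -> None:
        self.evaluations: Dict[str, Dict] = {}

    def add_evaluation(self, metrics: Dict, name: Optional[str] = None,
                       evaluation_id: Optional[str] = None) -> str:
        # Default identifier: a random string, so each run adds a new entry.
        evaluation_id = evaluation_id or uuid.uuid4().hex
        # Default name: the date and time of the run.
        name = name or datetime.now().strftime("%Y-%m-%d %H:%M:%S")
        # Reusing an existing identifier replaces the earlier evaluation.
        self.evaluations[evaluation_id] = {"name": name, "metrics": metrics}
        return evaluation_id


store = ToyEvaluationStore()
# Two runs with default settings: two distinct Model Evaluations.
store.add_evaluation({"accuracy": 0.75})
store.add_evaluation({"accuracy": 0.78})
# Two runs with the same overridden identifier: the second overwrites the first.
store.add_evaluation({"accuracy": 0.80}, evaluation_id="weekly-check")
store.add_evaluation({"accuracy": 0.82}, evaluation_id="weekly-check")
```

After these four runs the toy store holds three evaluations, which is why overriding the identifier is best reserved for cases where overwriting is intended.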

Labels

Both the Evaluation recipe and the Standalone Evaluation recipe let you define labels, which are added to the computed Model Evaluation. Labels are useful for implementing your own semantics. See Analyzing evaluation results for additional information.
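One possible use of labels is to tag each evaluation and select evaluations by tag later. The sketch below assumes labels behave like key/value pairs; the label keys (`team`, `stage`), the evaluation names, and the `with_labels` helper are hypothetical and not part of DSS.

```python
from typing import Dict, List

# Toy Model Evaluations, each carrying user-defined labels.
evaluations: List[Dict] = [
    {"name": "run-a", "labels": {"team": "risk", "stage": "staging"}},
    {"name": "run-b", "labels": {"team": "risk", "stage": "production"}},
    {"name": "run-c", "labels": {"team": "marketing", "stage": "production"}},
]


def with_labels(items: List[Dict], **wanted: str) -> List[Dict]:
    """Keep only the evaluations whose labels match every requested pair."""
    return [
        item for item in items
        if all(item["labels"].get(key) == value for key, value in wanted.items())
    ]


production_risk = with_labels(evaluations, team="risk", stage="production")
```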

Limitations

The model must be a non-partitioned classification or regression model

Non-tabular MLflow inputs are not supported

Computer vision models are not supported

Deep Learning models are not supported

Time series forecasting models are not supported
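The limitations above can be read as a checklist to run before building an Evaluation recipe. The following sketch encodes them over a hypothetical model description; the dictionary keys (`prediction_type`, `partitioned`, `origin`, `tabular_input`, `computer_vision`, `deep_learning`) are assumptions for this example and do not correspond to DSS settings.

```python
from typing import Dict, List

SUPPORTED_PREDICTION_TYPES = {"classification", "regression"}


def unsupported_reasons(model: Dict) -> List[str]:
    """List the limitations that a (hypothetical) model description violates."""
    reasons = []
    if model.get("prediction_type") not in SUPPORTED_PREDICTION_TYPES:
        # Also covers time series forecasting, which is neither type.
        reasons.append("model must be a classification or regression model")
    if model.get("partitioned", False):
        reasons.append("partitioned models are not supported")
    if model.get("origin") == "mlflow" and not model.get("tabular_input", True):
        reasons.append("non-tabular MLflow inputs are not supported")
    if model.get("computer_vision", False):
        reasons.append("computer vision models are not supported")
    if model.get("deep_learning", False):
        reasons.append("deep learning models are not supported")
    return reasons


ok_model = {"prediction_type": "regression", "origin": "visual_ml"}
bad_model = {"prediction_type": "classification", "partitioned": True,
             "origin": "mlflow", "tabular_input": False}
```

An empty list from `unsupported_reasons(ok_model)` means none of the listed limitations applies; `bad_model` triggers the partitioning and MLflow input checks.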