Rebuilding Datasets¶

When you make changes to recipes within a Flow, or there is new data arriving in your source datasets, you will want to rebuild datasets to reflect these changes. There are multiple options for propagating these changes.

Build¶

This option rebuilds a dataset based upon changes to rows of upstream datasets. Right-click on the dataset and select Build; then select one of the following options:

Non recursive (Build only this dataset) builds the selected dataset using its parent recipe. This option requires the least computation, but does not take into account any upstream changes to datasets or recipes.

Recursive determines which recipes need to be run based on your choice:

Smart reconstruction checks each dataset and recipe upstream of the selected dataset to see if it has been modified more recently than the selected dataset. Dataiku DSS then rebuilds all impacted datasets down to the selected one. This is the recommended default.

Forced recursive rebuild rebuilds all of the dependencies of the selected dataset, going back to the start of the flow. This is the most computationally intensive operation, but it can be used for overnight builds so that you start the next day with a double-checked, up-to-date flow.

DEPRECATED “Missing” data only is a very specific and advanced mode that you’re unlikely to need. It works a bit like Smart reconstruction, but a dataset is (re)built only if it is completely empty. This mode is deprecated and not recommended for general usage.
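The same build modes can also be requested from code. The sketch below maps the options above onto job-type strings. The string values are assumed to match those accepted by the Dataiku public Python API and should be verified against the API reference for your DSS version. The `BUILD_MODES` table and the `build_dataset` helper are illustrative names, not part of DSS; `dataset` can be any object that exposes a `build(job_type=...)` method.

```python
# Assumed mapping from the UI build modes to API job-type strings.
# Verify these values against the API reference for your DSS version.
BUILD_MODES = {
    "non_recursive": "NON_RECURSIVE_FORCED_BUILD",
    "smart": "RECURSIVE_BUILD",
    "forced_recursive": "RECURSIVE_FORCED_BUILD",
    "missing_only": "RECURSIVE_MISSING_ONLY_BUILD",  # deprecated mode
}


def build_dataset(dataset, mode="smart"):
    """Trigger a build on `dataset` using one of the modes above.

    `dataset` is any object with a `build(job_type=...)` method
    (hypothetical helper, shown for illustration only).
    """
    try:
        job_type = BUILD_MODES[mode]
    except KeyError:
        raise ValueError(f"Unknown build mode: {mode!r}") from None
    return dataset.build(job_type=job_type)
```

Defaulting to the smart mode mirrors the recommendation above; a forced recursive build has to be requested explicitly because it is the most expensive option.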

Note

In Smart reconstruction mode, DSS checks all upstream datasets and recipes. A dataset is considered outdated, and will be rebuilt, if any of the following is true:

A recipe upstream has been modified. If this is the case, its output dataset is considered out-of-date.

The settings of a dataset upstream have changed.

The build date of the dataset is more recent than the last known build date.

For external datasets (datasets not managed by DSS):

For file-based datasets: DSS checks the marker file if there is one; otherwise, it checks whether the content of the dataset has changed. DSS uses filesystem metadata to detect a change in the file list (e.g. files added or removed) or in the files themselves (e.g. size, last modification date).

For SQL datasets: DSS considers the dataset up-to-date by default, as it is difficult to know whether an input SQL dataset has changed.

Known limitation: Smart reconstruction cannot detect a change to a variable used in a visual recipe; in that case, you have to force a rebuild.
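The checks in this note can be illustrated with a toy model. The sketch below is not how DSS implements Smart reconstruction; it is a simplified simulation in which every timestamp is a plain integer, and the `Dataset` class and both functions are hypothetical names introduced for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Dataset:
    name: str
    last_built: int             # last build time (for a source: last content change)
    recipe_modified: int = 0    # last edit time of the parent recipe
    settings_modified: int = 0  # last edit time of the dataset settings
    inputs: list = field(default_factory=list)  # upstream Dataset objects


def outdated(ds, cache):
    """Return True if `ds` would be rebuilt under this simplified model."""
    if not ds.inputs:
        return False  # source dataset: there is no recipe to run
    if ds.name not in cache:
        cache[ds.name] = (
            ds.recipe_modified > ds.last_built
            or any(
                outdated(up, cache)
                or up.settings_modified > ds.last_built
                or up.last_built > ds.last_built
                for up in ds.inputs
            )
        )
    return cache[ds.name]


def smart_rebuild_plan(target):
    """List the datasets to rebuild, upstream first, ending at `target`."""
    plan, seen, cache = [], set(), {}

    def visit(ds):
        if ds.name in seen:
            return
        seen.add(ds.name)
        for up in ds.inputs:
            visit(up)
        if outdated(ds, cache):
            plan.append(ds.name)

    visit(target)
    return plan


raw = Dataset("raw", last_built=5)
prepared = Dataset("prepared", last_built=3, inputs=[raw])
joined = Dataset("joined", last_built=4, inputs=[prepared])
other = Dataset("other", last_built=10, recipe_modified=2, inputs=[raw])
output = Dataset("output", last_built=11, inputs=[joined, other])

print(smart_rebuild_plan(output))  # -> ['prepared', 'joined', 'output']
```

In this example `other` is left alone because neither its recipe nor its input changed after its last build, while `prepared` is rebuilt because `raw` changed more recently, which in turn makes everything downstream of it outdated.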

Preventing a Dataset from being built¶

You might want to prevent some datasets from being rebuilt, for instance, if rebuilding them is particularly expensive or if their unavailability must be restricted to certain hours. In a dataset’s Settings > Advanced tab, you can configure its Rebuild behavior:

Normal: the dataset can be rebuilt, including recursively in the cases described above.

Explicit: the dataset can be rebuilt, but not recursively when rebuilding a downstream dataset.

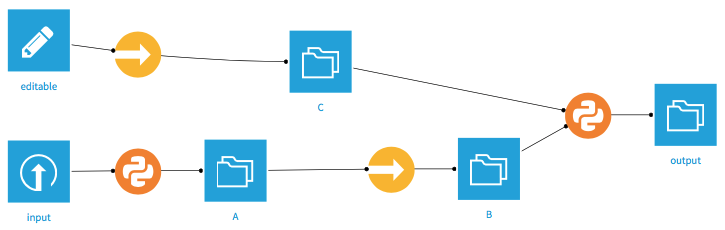

Given a flow such as the following:

If B is set as Explicit rebuild, building Output recursively, even with Forced-recursive, will only rebuild C (and Output). B will not be rebuilt, nor will its upstream datasets.

To rebuild B, you need to build it explicitly, e.g. by right-clicking it and choosing Build. This also holds true when Output is built from a Scenario or via an API call.

Write-protected: the dataset cannot be rebuilt, even explicitly, making it effectively read-only from the Flow’s perspective. You can still write to this dataset from a Notebook.
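The effect of these settings on a recursive build can be sketched with a small simulation. The flow below (Source -> A -> B -> C -> Output) is a hypothetical linear flow chosen for illustration and may not match the pictured one; the `EXPLICIT` and `WRITE_PROTECTED` labels and the `forced_recursive_plan` function are likewise illustrative names, not DSS identifiers.

```python
# Hypothetical linear flow: Source -> A -> B -> C -> Output.
UPSTREAM = {
    "Source": [],
    "A": ["Source"],
    "B": ["A"],
    "C": ["B"],
    "Output": ["C"],
}


def forced_recursive_plan(target, upstream, behavior):
    """Datasets rebuilt by a forced recursive build of `target`, upstream first.

    `behavior` maps dataset names to "EXPLICIT" or "WRITE_PROTECTED";
    datasets that are not listed use the Normal rebuild behavior.
    """
    plan = []

    def visit(name, is_target):
        if not upstream[name]:
            return  # source dataset: nothing to build
        mode = behavior.get(name, "NORMAL")
        if mode == "WRITE_PROTECTED":
            return  # never rebuilt, even when selected directly
        if mode == "EXPLICIT" and not is_target:
            return  # skipped, and so is everything upstream of it
        for parent in upstream[name]:
            visit(parent, False)
        if name not in plan:
            plan.append(name)

    visit(target, True)
    return plan


print(forced_recursive_plan("Output", UPSTREAM, {}))               # -> ['A', 'B', 'C', 'Output']
print(forced_recursive_plan("Output", UPSTREAM, {"B": "EXPLICIT"}))  # -> ['C', 'Output']
print(forced_recursive_plan("B", UPSTREAM, {"B": "EXPLICIT"}))       # -> ['A', 'B']
```

The last call shows why B has to be selected directly: an Explicit dataset is only built when it is the target of the build.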

Build Flow outputs reachable from here¶

This option rebuilds datasets downstream of a recipe or dataset, based upon changes to rows of datasets. Right-click on a recipe or dataset and select Build Flow outputs reachable from here; then select a method for handling dependencies (see the descriptions above).

Dataiku DSS starts from the selected dataset and walks the flow downstream, following all branches, to determine the terminal datasets at the “end” of the flow.

DSS then builds each terminal dataset with the selected option.

This means that the selected dataset has no special meaning; it may or may not be rebuilt, depending on whether or not it is out of date with respect to the terminal datasets.
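The downstream walk can be sketched as a simple graph traversal. This is a simplified illustration rather than the DSS implementation: the flow is reduced to a hypothetical dataset-to-dataset mapping (recipes are omitted), and the function and dataset names are made up for the example.

```python
def terminal_datasets(start, downstream):
    """Return the datasets reachable from `start` that have no successors."""
    terminals, seen, stack = [], set(), [start]
    while stack:
        name = stack.pop()
        if name in seen:
            continue
        seen.add(name)
        children = downstream.get(name, [])
        if children:
            stack.extend(children)  # keep walking every branch
        else:
            terminals.append(name)  # nothing downstream: end of the flow
    return sorted(terminals)


# Hypothetical flow: each key lists the datasets built directly from it.
FLOW = {
    "orders": ["orders_clean"],
    "orders_clean": ["report", "features"],
    "customers": ["features"],
    "features": ["scores"],
}

print(terminal_datasets("orders_clean", FLOW))  # -> ['report', 'scores']
```

Building `scores` recursively would also consider `customers`, which is not downstream of the selected dataset. This is why the selected dataset is only a starting point for finding the terminal datasets, not the root of the build.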

Propagate schema across Flow from here¶

This option propagates changes to the columns of a dataset to all downstream datasets. The schema can have changed either because the columns in the source data have changed or because you have made changes to the recipe that creates the dataset. To do this, right-click a recipe or dataset and select Propagate schema across Flow from here.

This opens the Schema Propagation tool. Click Start to begin manual schema propagation, then for each recipe that needs an update:

Open it, preferably in a new tab.

Force a save of the recipe: press Ctrl+S (or modify anything, click Save, revert the change, and save again). For most recipe types, saving triggers a schema check, which detects the need for an update and offers to fix the output schema. DSS will not silently update the output schema, as doing so could break other recipes relying on this schema. Most of the time, though, the desired action is to accept and click Update Schema.

You will probably need to run the recipe again.

Some recipe types cannot be automatically checked; for example, Python recipes.

Some options in the tool allow you to automate schema propagation:

Perform all actions automatically: when selected, schema propagation will automatically rebuild datasets and recipes to achieve full schema propagation with minimal user intervention.

Perform all actions automatically and build all output datasets afterwards: same as the previous option, plus a standard recursive Build All operation invoked at the end of the schema propagation.

Note

If your schema changes would break the settings of a downstream recipe, then you will need to manually fix the recipe and restart the automatic schema propagation. For example, if you manually selected columns to keep in a Join recipe (rather than automatically keeping all columns), and then delete one of those selected columns in an upstream Prepare recipe, then automatic schema propagation will fail at the Join recipe.
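The Join failure described in this note can be reproduced with a toy model of schemas as lists of column names. The `prepare` and `join` functions below are hypothetical stand-ins for the corresponding recipes, written only to show why a manual column selection breaks while "keep all columns" does not.

```python
def prepare(columns, drop=()):
    """Toy Prepare recipe: output schema is the input minus dropped columns."""
    return [c for c in columns if c not in drop]


def join(left, right, selected=None):
    """Toy Join recipe: keep all columns, or only a manually selected list."""
    available = left + [c for c in right if c not in left]
    if selected is None:
        return available  # "keep all columns" adapts to upstream changes
    missing = [c for c in selected if c not in available]
    if missing:
        raise ValueError(f"Join recipe references missing columns: {missing}")
    return list(selected)


orders = ["order_id", "customer_id", "amount", "comment"]
customers = ["customer_id", "country"]
selection = ["order_id", "amount", "country"]

# Before the upstream change, the manual selection is valid.
print(join(prepare(orders), customers, selected=selection))

# After dropping "amount" upstream, the same selection no longer resolves.
try:
    join(prepare(orders, drop=("amount",)), customers, selected=selection)
except ValueError as err:
    print(err)
```

In this model, the fix is the same as in the note: update the selection in the Join recipe, then restart the propagation.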

Advanced Options¶

Rebuild input datasets of recipes whose output schema may depend on input data (prepare, pivot): If this option is selected, schema propagation automatically rebuilds datasets as necessary to ensure that the output schemas of these recipes can be correctly calculated. This is particularly important for Pivot recipes and Prepare recipes with pivot steps. If you have no such recipes, it may be faster to deselect this option.

Rebuild output datasets of recipes whose output schema is computed at runtime: Selecting this will ensure the rebuild of:

all code recipes

Pivot recipes which are set to “recompute schema on each run” in the Output step

Schemas for Prepare recipes should update automatically even if this is not selected.